Slack is a great tool for collaboration, but it can be hard to keep up with all the conversations. Wouldn't it be great to have someone summarize the channel for you, giving you highlights and answering questions about the details?

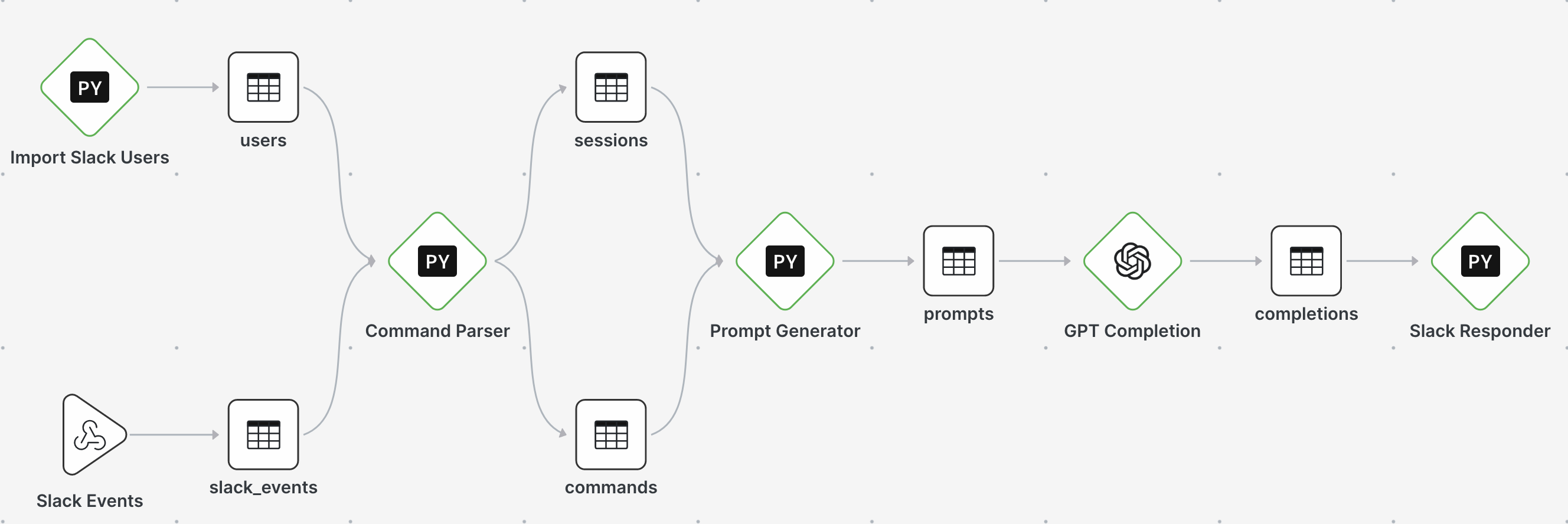

In this guide, we will explore building a Slackbot that uses OpenAI's GPT-3 to summarize Slack channels. To quickly build and deploy the bot we'll use Patterns, which provides storage, webhook listeners, serverless execution, Slack & OpenAI integrations, and an execution scheduler.

The purpose of this guide is to give a broad overview of what is required to build the bot.

Source: For the full source code, see Slack Summarizer on GitHub

Try it: To use the bot in your own Slack workspace, you can clone it here: Slack Summarizer in Patterns

Interaction design

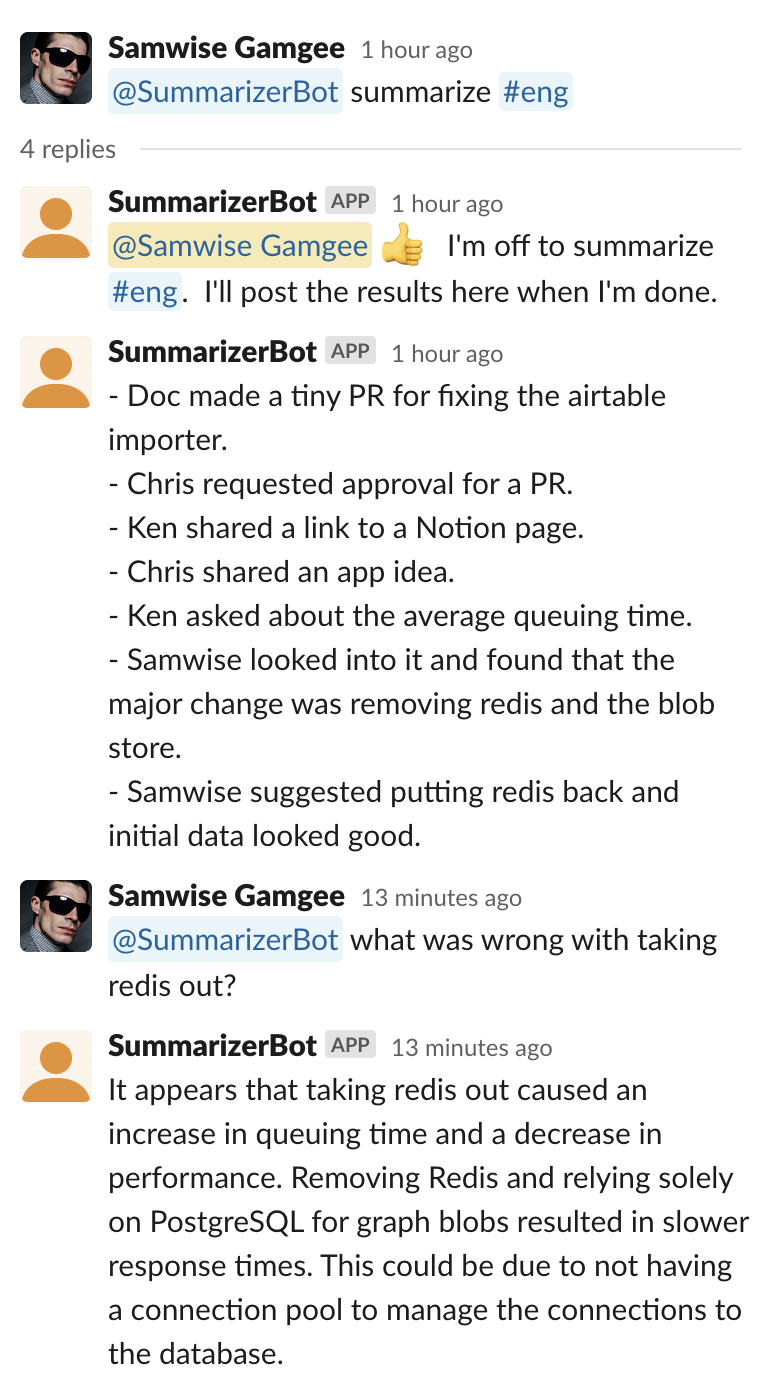

Here's how our bot should work:

- Mention: A user @mentions the bot, asking it to summarize a #channel

- Quick Reply: The bot immediately replies, creating a Slack thread to interact with the user

- Fetch Messages: The bot makes requests to Slack to get the relevant channel history

- Prompt Creation: The bot constructs a prompt using the channel messages and sends it to OpenAI's GPT-3 model

- Reply to User: The response from OpenAI is sent back to the user in Slack

- Summary Interaction: The user may continue to @mention the bot in the thread, asking for more details.
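
As a rough sketch of the first step, Slack delivers the text of a mention with channels encoded as `<#CHANNEL_ID|name>` and users as `<@USER_ID>`. The helper below pulls out the channel to summarize and the remaining request text. The names `parse_mention` and `MentionRequest` are hypothetical and are not taken from the original bot.

```python
import re
from typing import NamedTuple, Optional

# Slack encodes channel mentions as <#C024BE7LR|general> (the |name part may be absent)
CHANNEL_RE = re.compile(r"<#([A-Z0-9]+)(?:\|[^>]*)?>")
# User mentions, including the bot itself, look like <@U04F2AMBJ14>
USER_RE = re.compile(r"<@([A-Z0-9]+)>")


class MentionRequest(NamedTuple):
    channel_id: Optional[str]  # channel the user asked about, if any
    text: str                  # the request with mention markup removed


def parse_mention(event_text: str) -> MentionRequest:
    """Extract the first mentioned channel and the plain request text."""
    match = CHANNEL_RE.search(event_text)
    channel_id = match.group(1) if match else None
    # Strip channel and user markup, then collapse leftover whitespace
    plain = USER_RE.sub("", CHANNEL_RE.sub("", event_text))
    return MentionRequest(channel_id, " ".join(plain.split()))


request = parse_mention("<@U04F2AMBJ14> summarize <#C024BE7LR|general> please")
print(request)
```

When no channel is mentioned, `channel_id` is `None`, and the bot could fall back to the channel the mention was posted in.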

Prompt Design

Prompts are the API of GPT-3, and the community is still exploring the best ways to create them to get the best results. Our considerations for this guide are:

- Length: the `text-davinci-003` model has a limit of about 4,000 tokens per request, shared between the prompt and the completion. This limits the number of messages we can include in the prompt.

- Structure: messages are ordered chronologically, and each message may have a list of replies.

Considering length, we will take the simplest approach: only query the last X hours of messages. This is inadequate for a real application, but it serves our purposes here. There are a variety of techniques for summarizing large quantities of text, such as chunking or using embeddings to search for related information. There isn't currently a one-size-fits-all approach, but it's a very active area of research.
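
A minimal sketch of the "last X hours" window: Slack's `conversations.history` method accepts an `oldest` argument, a Unix timestamp that marks the start of the range to return. The helper name `oldest_timestamp` is made up for illustration, and how the original bot computes its window is an assumption here.

```python
import time
from typing import Optional


def oldest_timestamp(hours: float, now: Optional[float] = None) -> str:
    """Return the Unix timestamp (as a string) marking the start of the window."""
    if now is None:
        now = time.time()
    return f"{now - hours * 3600:.6f}"


# Start of a 24-hour window ending at a fixed, made-up point in time
print(oldest_timestamp(24, now=1_700_000_000))
```

Passing `now` explicitly keeps the helper easy to test; in the bot it would default to the current time.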

Considering structure and length together, we will make a minimal JSON representation of the messages, saving on our token budget while preserving the structure. Note that our JSON does not include any time-related information for a message. In our experience, the bot doesn't need it to be generally useful.

The pretty version of the below JSON is 155 tokens, while the compacted version is 59 tokens. It pays to be compact!

    [
      {
        "u": "Marty McFly",
        "t": "Hey Doc, I need your help with something",
        "r": [
          {
            "u": "Doc Brown",
            "t": "Sure Marty, what's up?"
          },
          {
            "u": "Marty McFly",
            "t": "I need to go back in time to 1955"
          }
        ]
      }
    ]
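
One way to produce this compact form is sketched below. The input shape (messages with `user`, `text`, and an optional nested `replies` list) is an assumption made for illustration, as are the helper names `compact_message` and `to_prompt_json`. The size comparison counts characters rather than tokens, so it only hints at the token savings.

```python
import json
from typing import Any, Dict, List


def compact_message(msg: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a message to user ("u"), text ("t"), and optional replies ("r")."""
    out: Dict[str, Any] = {"u": msg["user"], "t": msg["text"]}
    replies = [compact_message(r) for r in msg.get("replies", [])]
    if replies:
        out["r"] = replies
    return out


def to_prompt_json(messages: List[Dict[str, Any]]) -> str:
    # separators=(",", ":") drops the spaces json.dumps adds by default
    return json.dumps([compact_message(m) for m in messages], separators=(",", ":"))


messages = [
    {
        "user": "Marty McFly",
        "text": "Hey Doc, I need your help with something",
        "ts": "1673985851.921529",  # dropped: timestamps are not sent to the model
        "replies": [
            {"user": "Doc Brown", "text": "Sure Marty, what's up?"},
            {"user": "Marty McFly", "text": "I need to go back in time to 1955"},
        ],
    }
]

compact = to_prompt_json(messages)
pretty = json.dumps(json.loads(compact), indent=4)
print(compact)
print(len(compact), "characters compact vs", len(pretty), "characters pretty")
```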

Channel Summarizing Prompt

We wrap the messages with processing instructions. When summarizing, the prompt looks like this:

Here are a list of messages in JSON format.

{the JSON messages}

Please summarize the messages and respond with bullets in markdown format.

Summary Interactive Prompt

When the user asks for more details about a summary, we supply the same messages but allow the user's query to be included directly in the prompt:

Here are a list of messages in JSON format.

{the JSON messages}

Please respond to this question in markdown format: {the user's question}
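
Both prompts share the same wrapper, so they can be built by one function. This is a sketch that reuses the prompt wording above; the function name `build_prompt` and its signature are assumptions.

```python
from typing import Optional

SUMMARY_INSTRUCTION = (
    "Please summarize the messages and respond with bullets in markdown format."
)


def build_prompt(messages_json: str, question: Optional[str] = None) -> str:
    """Wrap the JSON messages with instructions for summarizing or answering."""
    if question:
        instruction = f"Please respond to this question in markdown format: {question}"
    else:
        instruction = SUMMARY_INSTRUCTION
    # Blank lines separate the preamble, the messages, and the instruction
    return "\n".join(
        ["Here are a list of messages in JSON format.", "", messages_json, "", instruction]
    )


print(build_prompt('[{"u":"Marty McFly","t":"Hey Doc"}]'))
print(build_prompt('[{"u":"Marty McFly","t":"Hey Doc"}]', "Who asked for help?"))
```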

Working with the channel history

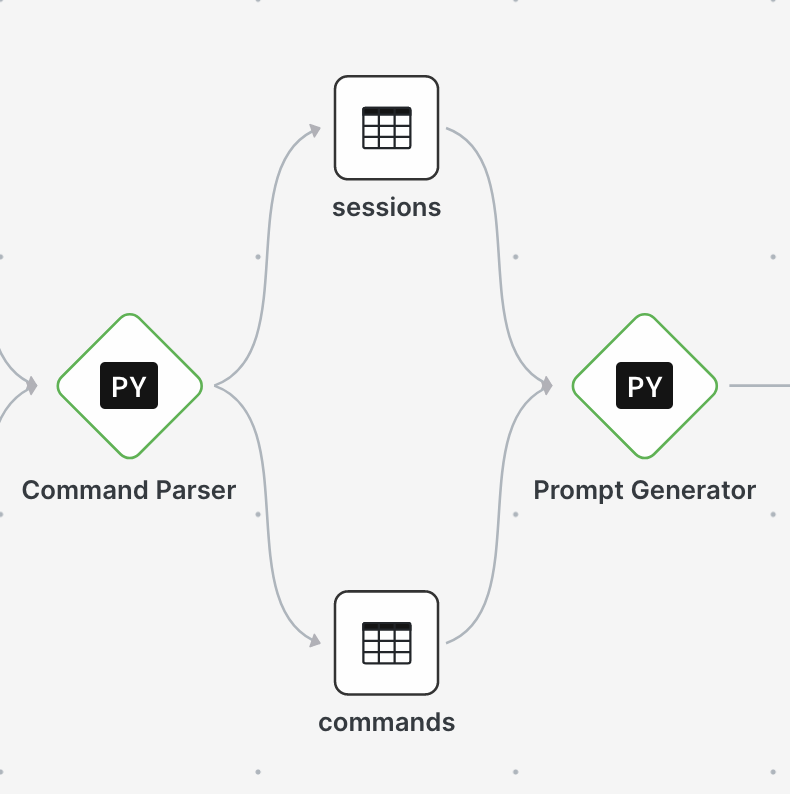

When a user @mentions the bot, we need to get the channel history. To do this, we simply ask Slack for the recent messages and threads within the channel. This can result in many API calls, each taking some time. To make this faster, we have two options:

- Store all Slack messages in a Patterns table and query against it. This would result in the fastest response time, but would require a lot of storage for things that might not ever be queried.

- Query Slack on demand but cache the results in a 'session', allowing the bot to reuse the messages for multiple interactions.

For this guide, we adopt the second approach. When a conversation is initiated with the bot, the messages are fetched from Slack and stored in a session. The session is then used for all subsequent interactions.
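
The session idea can be sketched as a small cache keyed by the thread that the conversation lives in. This is an illustration only: it keeps sessions in an in-memory dictionary rather than in Patterns storage, and the class name, the thread-timestamp key, and the fetcher signature are all assumptions.

```python
from typing import Callable, Dict, List

Message = Dict[str, object]


class SessionCache:
    """Cache channel history per conversation thread (in memory, for illustration)."""

    def __init__(self, fetch_history: Callable[[str], List[Message]]) -> None:
        self._fetch_history = fetch_history
        self._sessions: Dict[str, List[Message]] = {}

    def messages_for(self, thread_ts: str, channel_id: str) -> List[Message]:
        # Only the first interaction in a thread pays for the Slack API calls
        if thread_ts not in self._sessions:
            self._sessions[thread_ts] = self._fetch_history(channel_id)
        return self._sessions[thread_ts]


calls: List[str] = []


def fake_fetch(channel_id: str) -> List[Message]:
    """Stand-in for the real Slack requests; records each call it receives."""
    calls.append(channel_id)
    return [{"u": "Doc Brown", "t": "Great Scott!"}]


cache = SessionCache(fake_fetch)
first = cache.messages_for("1673985851.921529", "C024BE7LR")
second = cache.messages_for("1673985851.921529", "C024BE7LR")
print("fetches:", len(calls))
```

The trade-off is staleness: messages posted after the session starts are not seen by follow-up questions in the same thread.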

Replacing user IDs in Slack messages

Messages from the Slack API contain user IDs instead of user names. This allows users to change their names and still show up correctly in message history. The raw messages look like this:

    {
        'text': 'What do you think, <@U04F2AMBJ14>?',
        'ts': '1673985851.921529',
        ...
    }

We need to replace these IDs with the users' names. Fortunately, we can simply ask Slack for a list of users in the workspace and use this mapping to replace the IDs in each message. This is a simple operation, but we don't want to do it every time the bot needs to make a summary, because each API call slows it down. The list of Slack users in a workspace doesn't change very often, so it's a perfect candidate for caching.
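
The replacement itself can be sketched with a regular expression. The mapping below is made up for illustration, and `replace_user_ids` is a hypothetical helper name.

```python
import re
from typing import Dict

USER_MENTION_RE = re.compile(r"<@([A-Z0-9]+)>")


def replace_user_ids(text: str, names_by_id: Dict[str, str]) -> str:
    """Swap <@USER_ID> mentions for names, leaving unknown IDs untouched."""

    def lookup(match: "re.Match[str]") -> str:
        return names_by_id.get(match.group(1), match.group(0))

    return USER_MENTION_RE.sub(lookup, text)


names_by_id = {"U04F2AMBJ14": "Doc Brown"}  # made-up mapping for illustration
print(replace_user_ids("What do you think, <@U04F2AMBJ14>?", names_by_id))
```

Leaving unknown IDs in place, rather than raising an error, keeps the bot working when the cached user list is slightly out of date.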

Caching user IDs every day

Patterns has two capabilities which help us quickly solve this problem:

- Built-in storage, which gives us a place to put the cache

- A scheduler, which can run our code periodically

We create a Python node called Import Slack Users which gets the list of users from Slack and puts it into a table called `users`. We schedule it to run once a day at 2am UTC by giving it the cron expression `0 2 * * *`.

Now we have a users table which the rest of our bot can use to map user IDs to names.

The code to do this roughly looks like:

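    # Assumes `Table` comes from the patterns package and `slack` is an
    # authenticated slack_sdk WebClient.
    user_table = Table("users", mode="w")
    user_table.init(unique_on="id")  # one row per Slack user ID

    # Fetch the workspace members and insert or update them in the table
    users_list = slack.users_list()
    user_table.upsert(users_list.get("members", []))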
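
Once the rows are cached, they can be turned into an ID-to-name lookup. The sketch below assumes the rows keep the shape of Slack user objects (`id`, `name`, `real_name`, and a `profile` with `display_name`). The helper name `build_name_map` and the preference order are illustrative choices, not necessarily what the original bot does.

```python
from typing import Any, Dict, Iterable


def build_name_map(members: Iterable[Dict[str, Any]]) -> Dict[str, str]:
    """Map each Slack user ID to the most readable name available."""
    mapping: Dict[str, str] = {}
    for member in members:
        profile = member.get("profile") or {}
        # Prefer the display name, then the real name, then the handle
        name = (
            profile.get("display_name")
            or member.get("real_name")
            or member.get("name")
            or member["id"]
        )
        mapping[member["id"]] = name
    return mapping


sample_members = [  # made-up rows shaped like Slack user objects
    {"id": "U04F2AMBJ14", "name": "doc", "real_name": "Doc Brown",
     "profile": {"display_name": ""}},
    {"id": "U04F2AMBJ15", "name": "marty",
     "profile": {"display_name": "Marty McFly"}},
]
print(build_name_map(sample_members))
```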

Interacting with OpenAI and Slack

The APIs for interacting with Slack and OpenAI are well-documented and easy to use. The final piece of the bot is to issue the query to OpenAI and send the response back to the user in Slack. This is implemented in the final section of the graph.
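
A sketch of this last step, written so that it runs without network access: the model call and the Slack call are passed in as plain functions. In a real bot, `complete` would wrap a request to OpenAI's completions endpoint and `post_reply` would wrap `chat_postMessage` on a `slack_sdk` `WebClient`, using its `channel`, `thread_ts`, and `text` arguments. The function name `answer_in_thread` and the fallback message are assumptions.

```python
from typing import Callable, List, Tuple


def answer_in_thread(
    prompt: str,
    channel_id: str,
    thread_ts: str,
    complete: Callable[[str], str],
    post_reply: Callable[[str, str, str], None],
) -> str:
    """Send the prompt to the model and post the completion into the Slack thread."""
    reply = complete(prompt).strip()
    if not reply:
        # Hypothetical fallback so the user is never left without an answer
        reply = "Sorry, I couldn't come up with a summary."
    post_reply(channel_id, thread_ts, reply)
    return reply


# Stand-ins for the real OpenAI and Slack clients
sent: List[Tuple[str, str, str]] = []


def fake_complete(prompt: str) -> str:
    return "\n- Marty asks Doc for help travelling back to 1955\n"


def fake_post_reply(channel_id: str, thread_ts: str, text: str) -> None:
    sent.append((channel_id, thread_ts, text))


answer_in_thread("...", "C024BE7LR", "1673985851.921529", fake_complete, fake_post_reply)
print(sent[0][2])
```

Injecting the two calls keeps the flow testable and makes it straightforward to swap in a different model later.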

Evaluation and Conclusion

The bot actually performs quite well; it does a good job with the summary and responds reasonably to follow-up questions. Like other generative uses of GPT, it suffers from hallucination and repetition.

Is the bot useful? My Slack channels are not very active, so it's not difficult to read the original content or search when I'm curious about a topic. It would certainly be more useful in a channel with a lot of activity. It could be useful in other areas, such as summarizing the week's activity and sending a digest email.

If I find myself @messaging the bot instead of simply scrolling back, I'll know that such a bot is a winning piece of tech.