(fyi, here’s the code for everything I built below, you can clone and deploy the full app here as well.)

“AskHN” is a GPT-3 bot I trained on a corpus of over 6.5 million Hacker News comments to represent the collective wisdom of the HN community in a single bot. You can query it here in our Discord using the /askhn command if you want to play around (I’ve rate-limited the bot for now to keep my OpenAI bill from bankrupting me, so you might have to wait around for your spot).

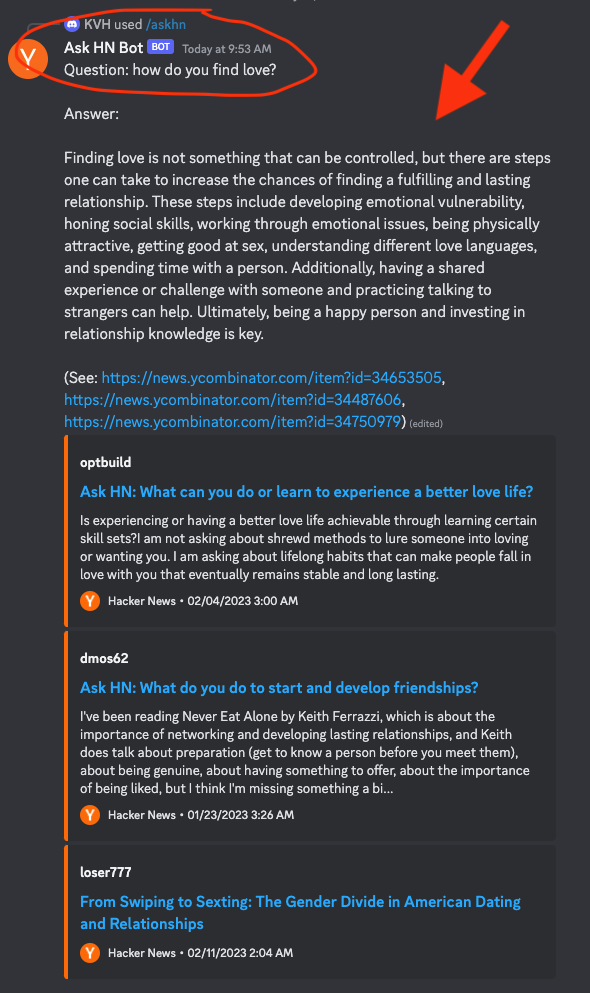

More details on how I built it below, but I found the LLM-embedded embodiment of HN’s wisdom to be an impressive and genuinely useful resource. Thousands of the sharpest minds in technology have volunteered up their expertise, wisdom, and occasional flame over the years. There are very few topics Ask HN Bot does not have a solid answer to, even the question of finding love!

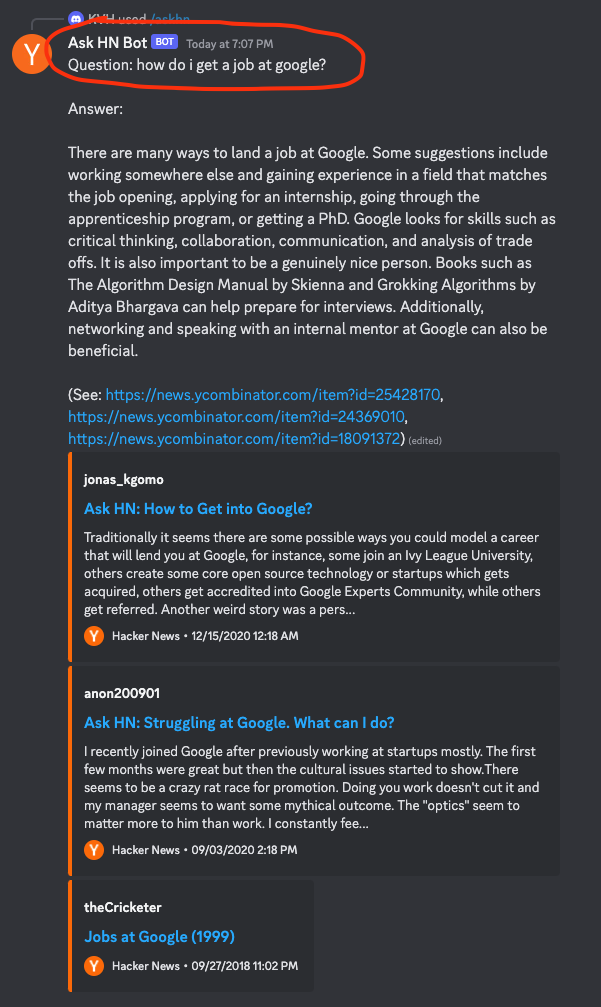

It does well with job advice:

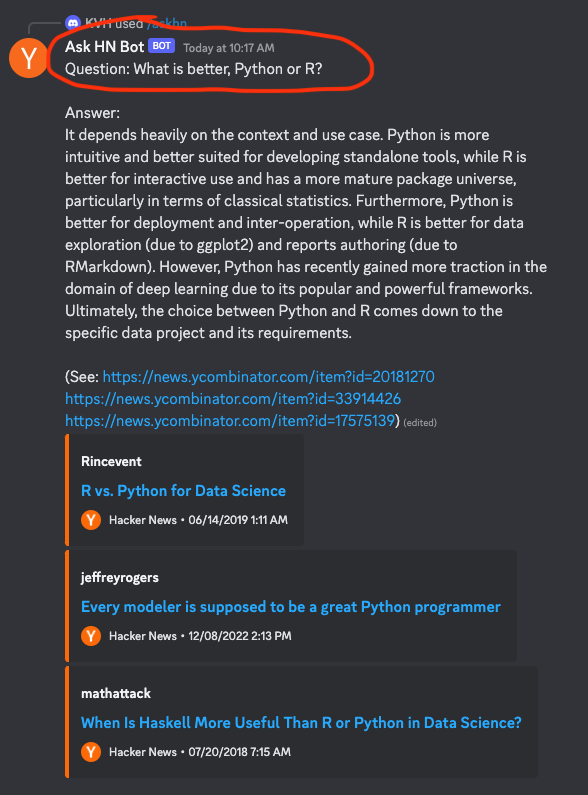

...classic flame wars:

...the important questions:

...and unanswerable questions:

The GPT-3 prompted summaries are pretty good! And the linked articles are all very relevant -- the 1,536 dimensions of the OpenAI GPT embeddings are enough to slice the semantic space for this use case.

Its main failure mode is summarizing contradictory / irrelevant comments into one response, which can lead to incoherence.

How I built it

I built it in Patterns Studio over the course of a few days. Here was my rough plan at the start:

- Ingest the entire HN corpus (bulk or API) - 34.8 million stories and comments as of this writing

- Play around with filtering to get the right set of content (top articles, top comments)

- Bundle article title and top comment snippets into a document and fetch the OpenAI embedding via text-embedding-ada-002

- Index the embeddings in a database

- Accept questions via Discord bot (as well as manual input for testing)

- Look-up closest embeddings

- Put top matching content into a prompt and ask GPT-3 to summarize

- Return summary along with direct links to comments back to Discord user

This plan went ok, but results tended to be a bit too generic: I didn’t have enough of the specific relevant comment content in the prompt to give technical and specific answers. Embedding every single one of the 6.5 million eligible comments was prohibitively time-consuming and expensive (12 hours and ~$2,000). So I ended up with this extension for step 6 (the embedding look-up):

- Look-up closest embeddings (stories)

- With list of top N matching stories, get embeddings for all top comments on the stories at question-answering time

- Compute each comment's similarity to the question embedding and choose the top K comments closest to the question

- Put top matching comments into a prompt and ask GPT-3 to answer the question using the context

Let's dive into details for each of the steps above.

Ingesting and filtering HN corpus

Using the Firebase API, I downloaded the entire ~35m item database of HN comments and stories in parallel. This took about 30 minutes and left us with 4.8m stories and 30m comments. I filtered the stories to just those with more than 20 upvotes and at least 3 comments, which left me with 400k stories over the entire 17-year history of HN. I then further filtered to stories from the last 5 years, leaving us with a final number of 160k stories. For eligible comments, I filtered to those on an eligible story with at least 200 characters, for ~6.5 million comments total.
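As a rough sketch of the filtering rules above (not the actual pipeline code), the thresholds can be written as two small predicates. The field names `type`, `score`, `descendants`, `time`, and `text` follow the public HN Firebase item format; the function names and the pre-resolved `root_story_id` argument are assumptions made for illustration, since a comment's `parent` may be another comment rather than the story itself.

```python
from datetime import datetime, timedelta, timezone

MIN_SCORE = 20           # stories need MORE than 20 upvotes
MIN_COMMENTS = 3         # and at least 3 comments
MAX_AGE_YEARS = 5        # and must be from the last 5 years
MIN_COMMENT_CHARS = 200  # comments need at least 200 characters


def is_eligible_story(item, now):
    """Return True if an HN item dict passes the story filters."""
    if item.get("type") != "story":
        return False
    cutoff = now - timedelta(days=365 * MAX_AGE_YEARS)
    posted = datetime.fromtimestamp(item.get("time", 0), tz=timezone.utc)
    return (
        item.get("score", 0) > MIN_SCORE
        and item.get("descendants", 0) >= MIN_COMMENTS
        and posted >= cutoff
    )


def is_eligible_comment(item, root_story_id, eligible_story_ids):
    """Return True if a comment is long enough and sits on an eligible story.

    root_story_id is assumed to be resolved beforehand by walking up
    the comment's parent chain to the story it belongs to.
    """
    return (
        item.get("type") == "comment"
        and root_story_id in eligible_story_ids
        and len(item.get("text", "")) >= MIN_COMMENT_CHARS
    )
```

Keeping the thresholds as named constants makes it easy to play with the filters, which is most of the work at this stage.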

GPT embeddings

To index these stories, I loaded up to 2000 tokens worth of comment text (ordered by score, max 2000 characters per comment) and the title of the article for each story and sent them to OpenAI's embedding endpoint, using the standard text-embedding-ada-002 model. This endpoint accepts bulk uploads and is fast, but embedding all 160k+ documents still took over two hours. Total cost for this part was around $70.
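Here is a minimal sketch of how one document per story might be bundled before embedding. It is an illustration, not the code from the app: `build_story_document` and `approx_tokens` are hypothetical names, and the token count is approximated at 4 characters per token rather than computed with a real tokenizer.

```python
CHARS_PER_TOKEN = 4        # assumption: rough average for English text
MAX_COMMENT_TOKENS = 2000  # budget for comment text per story
MAX_COMMENT_CHARS = 2000   # per-comment cap


def approx_tokens(text):
    """Estimate the token count from character length (rounded up)."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def build_story_document(title, comments):
    """Bundle a title with its best comments into one text document.

    comments is a list of (score, text) pairs; higher scores go first.
    """
    parts = [title]
    used = 0
    for _, text in sorted(comments, key=lambda c: c[0], reverse=True):
        snippet = text[:MAX_COMMENT_CHARS]
        cost = approx_tokens(snippet)
        if used + cost > MAX_COMMENT_TOKENS:
            break  # comment budget exhausted
        parts.append(snippet)
        used += cost
    return "\n\n".join(parts)
```

Each returned string would then be sent to the embedding endpoint in batches.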

The embeddings were then indexed with Pinecone.

Discord bot

For a user interface, I decided to implement AskHN as a Discord bot. Given that I needed to rate limit responses, having a Discord bot (over a dedicated web app, for instance) would allow for a more fun and collaborative experience of folks sharing questions and answers, even if any given individual did not get a chance to ask a question.
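The post does not say how the rate limit was enforced, so the following is only one plausible approach: a per-user sliding-window limiter. The class name, parameters, and the per-user policy are all assumptions for illustration.

```python
import time
from collections import defaultdict, deque


class RateLimiter:
    """Allow at most max_requests per user within window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)

    def allow(self, user_id):
        now = self.clock()
        hits = self._hits[user_id]
        # Drop timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

A bot handler would call `allow(user_id)` first and reply with a "you have been rate limited" message when it returns `False`.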

Answering the question

With the embeddings indexed, and our bot live, responding to a given question involved the following steps:

- Give an immediate reply telling the user if we are working on the question or if the user has been rate limited.

- Compute the embedding of the question and query Pinecone for top three most similar stories

- Collect all top comments from all three stories, compute embeddings for all of these comments, and then rank by similarity to the question's embedding

- Concatenate most similar comments (up to max 1000 tokens per comment) until max tokens limit reached (3500)

- Build prompt with comments as context and send to GPT-3 to produce an answer

There are often dozens or even hundreds of relevant comments that we get the embeddings for. Luckily, embeddings are cheap and fast, so the cost of this is much less than even the single call to GPT-3 for the completion.
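The ranking and concatenation steps above can be sketched in plain Python. This is an illustration under stated assumptions rather than the bot's actual code: the embeddings are taken as already-fetched lists of floats, the function names are hypothetical, and tokens are approximated at 4 characters each instead of using a real tokenizer.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_comments(question_vec, comments):
    """Sort (text, embedding) pairs by similarity to the question, best first."""
    ranked = sorted(
        comments,
        key=lambda c: cosine_similarity(question_vec, c[1]),
        reverse=True,
    )
    return [text for text, _ in ranked]


def build_context(ranked_texts, max_total_tokens=3500,
                  max_comment_tokens=1000, chars_per_token=4):
    """Concatenate the best comments until the overall token budget is hit."""
    parts, used = [], 0
    for text in ranked_texts:
        snippet = text[: max_comment_tokens * chars_per_token]
        cost = -(-len(snippet) // chars_per_token)  # ceiling division
        if used + cost > max_total_tokens:
            break
        parts.append(snippet)
        used += cost
    return "\n\n".join(parts)
```

The string returned by `build_context` plays the role of the top comments text that goes into the final prompt.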

With the top comments text in hand we build the final prompt for GPT-3 as:

prompt = f"""{top_comments}

Answer the following question by summarizing the above comments (where relevant),

be specific and opinionated when warranted by the content:

Question: "{question}"

Answer: """

I played around with a number of prompts, but the main thing is to get GPT-3 to break out of its "Generic Helpful Internet Article" voice and take a more opinionated stance when warranted. So I told it to do that, and it did :)

Conclusion

The methodology I used here is a generic, scalable solution for distilling a knowledge corpus into an embodied intelligence. Would I use a bot like this over hn.algolia.com? Maybe, maybe not. Would I appreciate this service added onto the Algolia results page? Absolutely 100%.

Just shoot me an email, Algolia: kvh@patterns.app. Happy to help!

Building your own question-answering bot on any corpus

This same architecture and flow would work well for almost any text corpus and question-answering bot. If you have such a use case, please feel free to reach out to me at kvh@patterns.app anytime to discuss my learnings, or apply for our CoDev program at Patterns. You can get started by cloning the template for this bot.