The app I built for this post is available as a graph that you can view and clone here: https://studio.patterns.app/graph/sx5yykfi7u34ut7pvae4

I'm a big fan of the search experience provided by Algolia. It's super simple to implement and comes with a ton of rich features out of the box, such as contextual search results, typo tolerance, synonyms, and filtering stop words. Since Algolia can accurately retrieve blocks of relevant content, I had an idea: why not inject this search content into OpenAI's text completion prompt and see if I could generate an Algolia-powered GPT-3 chatbot?

Accomplishing this turned out to be surprisingly simple. All I did was feed the search results into OpenAI's text completion prompt and follow that up with a question. The result was quite solid.

And here’s what my prompt looked like:

Answer:

We can then upload and publish our component to our organization with the devkit command patterns upload path/to/graph.yml --publish-component . (You may see “graph errors” when you upload a component that has an unconnected input or unfilled parameter, these are ok to ignore, since they will be connected by users of your component) Now if we run patterns list components we should see it included in the output

Click on the +Add button and select a Python node to add to your graph

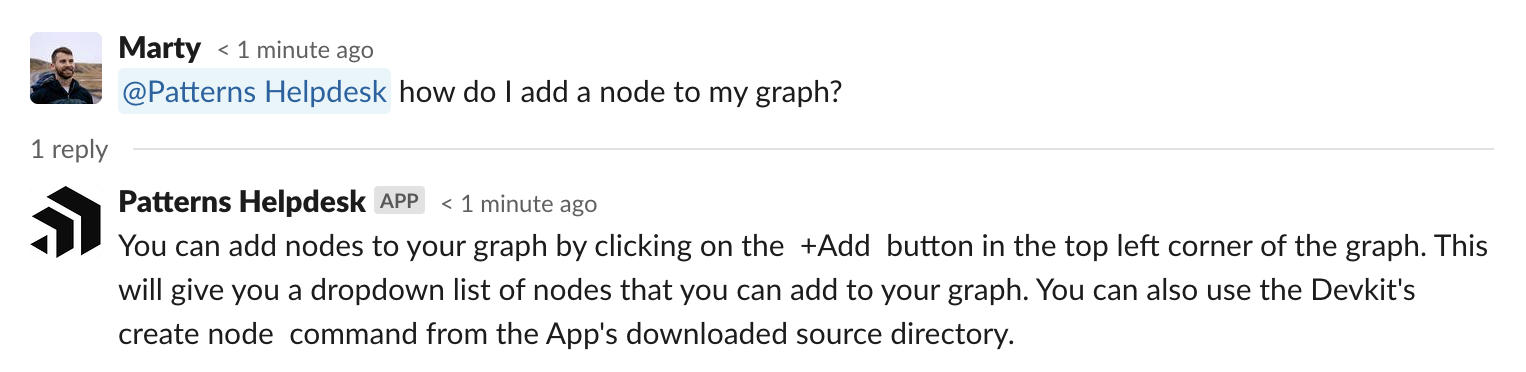

Graph's have an add button in the top left corner of the graph that provide a dropdown of Nodes that can be added to the graph. You can also use the

etc...

Question:

how do I add a node to my graph?
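The assembly step can be sketched in a few lines of Python. This is a minimal illustration rather than the code from my graph: the function name `build_prompt` and its arguments are hypothetical, and it assumes the search results have already been fetched as a list of plain-text blocks.

```python
def build_prompt(search_results, question):
    """Assemble a completion prompt: search results first, then the question.

    `search_results` is assumed to be a list of plain-text blocks that were
    already retrieved from the search index (hypothetical input shape).
    """
    # Drop empty blocks and join the rest with blank lines, mirroring the
    # layout of the prompt shown above.
    context = "\n\n".join(block.strip() for block in search_results if block.strip())
    return f"Answer:\n\n{context}\n\nQuestion:\n\n{question.strip()}\n"


if __name__ == "__main__":
    prompt = build_prompt(
        ["Click on the +Add button and select a Python node to add to your graph"],
        "how do I add a node to my graph?",
    )
    print(prompt)
```

The resulting string is what would be sent as the `prompt` of a text completion request.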

OpenAI's text-davinci-002 model has a prompt limit of 2048 tokens, so it's impossible to pass our entire documentation into a prompt. What's great about this solution is that passing in only the search results used around 1k tokens, which fits comfortably. You could also fine-tune your own model, but that would require a lot more work to maintain, and you run the risk of overfitting.
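One way to keep the search results inside a fixed token budget is to add blocks in ranked order until the budget is used up. The sketch below is an assumption on my part, not what the graph does: the helper names are hypothetical, and the token count is a rough heuristic of about 4 characters per token for English text rather than a real tokenizer.

```python
def estimate_tokens(text):
    # Rough heuristic (an assumption): about 4 characters per token for
    # English text. A real tokenizer would give exact counts.
    return max(1, len(text) // 4)


def fit_to_budget(search_results, budget_tokens=1000):
    """Keep the top-ranked results that fit within `budget_tokens`."""
    kept, used = [], 0
    for block in search_results:  # assumed to be ordered by relevance
        cost = estimate_tokens(block)
        if used + cost > budget_tokens:
            break  # stop at the first block that would exceed the budget
        kept.append(block)
        used += cost
    return kept
```

Stopping at the first block that does not fit keeps the most relevant results and leaves room in the prompt for the question and the completion.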

Be aware that 1k tokens with text-davinci-002 cost around $0.02.
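As a back-of-the-envelope check, the cost of a single request can be estimated from its token counts. This is a small sketch using the price quoted above; the function name is hypothetical, and it assumes both prompt and completion tokens are billed at the same rate.

```python
PRICE_PER_1K_TOKENS = 0.02  # USD, the text-davinci-002 figure quoted above


def estimate_cost(prompt_tokens, completion_tokens=0):
    """Estimate the cost in USD of one completion request."""
    # Assumption: prompt and completion tokens are billed at the same rate.
    total_tokens = prompt_tokens + completion_tokens
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS
```

For example, a 1,000-token prompt with a 500-token answer comes to roughly three cents under these assumptions.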

There were some Algolia search results that weren't relevant, such as information about GDPR and creating schemas. However, these irrelevant blocks did not affect the end result when it came to OpenAI predicting the next text completion for my question.

Passing context to OpenAI’s text completions

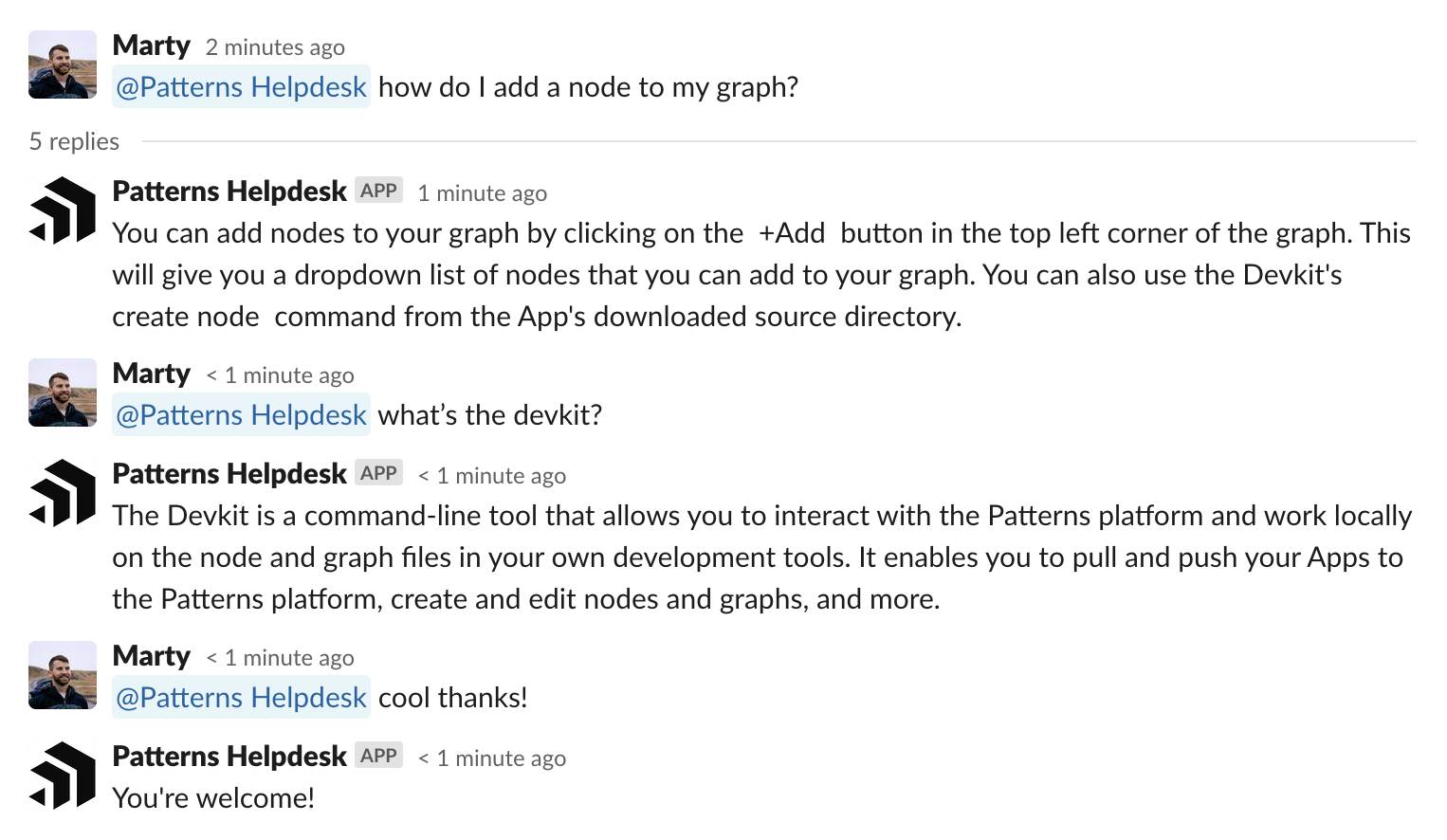

After this first part worked well, I thought it would be neat if the Slackbot could retain the context of the thread even if a non-question response or follow-up question was asked. Slack provides a unique thread ID, so I used this to fetch the entire conversation and appended it after the search results in my new prompt.
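The thread-aware prompt can be sketched as follows. This is an illustration under stated assumptions, not the exact code in my graph: the function name, the `Conversation:` label, the `User:`/`Bot:` prefixes, and the `bot_user_id` parameter are all hypothetical, and the thread is assumed to arrive as a list of message dictionaries with `user` and `text` keys (for example, as fetched from Slack by thread ID).

```python
def build_thread_prompt(search_results, thread_messages, bot_user_id="BOT"):
    """Build a prompt with search results first, then the thread so far.

    `thread_messages` is assumed to be a list of dicts with "user" and "text"
    keys, ordered oldest to newest (hypothetical input shape).
    """
    context = "\n\n".join(search_results)
    lines = []
    for msg in thread_messages:
        # Label each message so the model can tell the speakers apart.
        speaker = "Bot" if msg.get("user") == bot_user_id else "User"
        lines.append(f"{speaker}: {msg.get('text', '').strip()}")
    conversation = "\n".join(lines)
    # End with an open "Bot:" line so the completion continues as the bot.
    return f"{context}\n\nConversation:\n\n{conversation}\nBot:"
```

Ending the prompt on an open `Bot:` line is one simple way to nudge a completion model into answering as the bot rather than continuing the user's message.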

Learnings

It’s amazing how well this works, but there are still a few limitations:

- If the topic of a follow-up question is unrelated to the search results of the original question, the bot may struggle to provide an accurate response.

- Algolia search can be configured to prioritize marketing content over more helpful documentation. It's important to be mindful of how search is implemented to provide the best chat experience.

Conclusion

OpenAI's text completion API combined with text search can be a powerful tool for creating a bot that specializes in any given topic. If your organization uses Algolia and Slack, you can simply clone my graph and follow the setup instructions.